As if defenders of software supply chains didn’t have enough attack vectors to worry about, they now have a new one: machine learning models.

ML models are at the heart of technologies such as facial recognition and chatbots. Like open-source software repositories, the models are often downloaded and shared by developers and data scientists, so a compromised model could have a crushing impact on many organizations simultaneously.

Researchers at HiddenLayer, a machine learning security company, revealed in a blog post on Tuesday how an attacker could use a popular ML model to deploy ransomware.

The method described by the researchers is similar to how hackers use steganography to hide malicious payloads in images. In the case of the ML model, the malicious code is hidden in the model’s data.

According to the researchers, the steganography process is fairly generic and can be applied to most ML libraries. They added that the process need not be limited to embedding malicious code in the model and could also be used to exfiltrate data from an organization.
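To make the idea of hiding bytes in a model’s data concrete, here is a minimal, harmless sketch in Python. It is not the researchers’ method: the article does not say which bits are used, so this assumes a simple scheme in which each 32-bit floating-point weight carries one byte of a message in its eight lowest mantissa bits. The function names (`embed`, `extract`) and the payload, a plain text string, are hypothetical and purely illustrative.

```python
import random
import struct


def float_to_bits(x: float) -> int:
    """Return the 32-bit IEEE 754 pattern of x stored as a float32."""
    return struct.unpack("<I", struct.pack("<f", x))[0]


def bits_to_float(bits: int) -> float:
    """Return the float32 value encoded by a 32-bit pattern."""
    return struct.unpack("<f", struct.pack("<I", bits))[0]


def embed(weights, payload: bytes):
    """Hide one payload byte in the low 8 mantissa bits of each weight.

    Assumed scheme for illustration only; real attacks may differ.
    """
    if len(payload) > len(weights):
        raise ValueError("payload is larger than the number of weights")
    out = list(weights)
    for i, byte in enumerate(payload):
        bits = float_to_bits(out[i])
        bits = (bits & 0xFFFFFF00) | byte  # overwrite the low 8 bits
        out[i] = bits_to_float(bits)
    return out


def extract(weights, length: int) -> bytes:
    """Read back `length` bytes from the low 8 bits of each weight."""
    return bytes(float_to_bits(w) & 0xFF for w in weights[:length])


# Stand-in for a layer of model weights (random values, not a real model).
random.seed(0)
weights = [random.uniform(-1.0, 1.0) for _ in range(64)]

payload = b"harmless demo string"
stego = embed(weights, payload)
recovered = extract(stego, len(payload))

# Largest relative change to any weight caused by the embedding.
max_rel_change = max(abs(a - b) / abs(a) for a, b in zip(weights, stego))
print(recovered, max_rel_change)
```

Under this assumed scheme, each altered weight moves by only a few parts in 100,000, small enough that the model would likely behave almost the same. The file also contains nothing that looks like an executable, which is consistent with the researchers’ point that such payloads can slip past traditional scanners.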

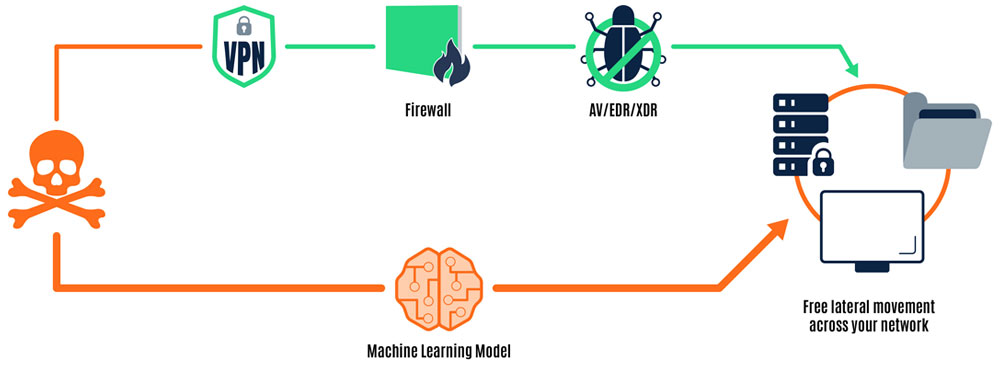

Planting malware in a machine learning model allows it to bypass traditional anti-malware defenses. (Image courtesy of HiddenLayer)

Attacks can be operating system agnostic, too. The researchers explained that OS- and architecture-specific payloads could be embedded in the model and loaded dynamically at runtime, depending on the platform.

Flying Under Radar

Embedding malware in an ML model offers some benefits to an adversary, observed Tom Bonner, senior director of adversarial threat research at the Austin, Texas-based HiddenLayer.

“It allows them to fly under the radar,” Bonner told TechNewsWorld. “It’s not a technique that’s detected by current antivirus or EDR software.”

“It also opens new targets for them,” he said. “It’s a direct route into data scientist systems. It’s possible to subvert a machine learning model hosted on a public repository. Data scientists will pull it down and load it up, then become compromised.”

“These models are also downloaded to various machine-learning ops platforms, which can be pretty scary because they can have access to Amazon S3 buckets and steal training data,” he continued.

“Most of [the] machines running machine-learning models have big, fat GPUs in them, so bitcoin miners could be very effective on those systems, as well,” he added.

First Mover Advantage

Threat actors often like to exploit unanticipated vulnerabilities in new technologies, noted Chris Clements, vice president of solutions architecture at Cerberus Sentinel, a cybersecurity consulting and penetration testing company in Scottsdale, Ariz.

“Attackers looking for a first mover advantage in these frontiers can enjoy both less preparedness and proactive protection from exploiting new technologies,” Clements told TechNewsWorld.

“This attack on machine-language models seems like it may be the next step in the cat-and-mouse game between attackers and defenders,” he said.

Mike Parkin, senior technical engineer at Vulcan Cyber, a provider of SaaS for enterprise cyber risk remediation in Tel Aviv, Israel, pointed out that threat actors will leverage whatever vectors they can to execute their attacks.

“This is an unusual vector that could sneak past quite a few common tools if done carefully,” Parkin told TechNewsWorld.
